Tesla Ordered to Pay Over $240 Million After Fatal Autopilot Crash, Jury Rules

A Florida jury has ruled that Tesla bears partial responsibility for a deadly 2019 crash involving its Autopilot system and that the company must pay roughly $242.5 million in damages to the victim's family and an injured survivor.

The jury assigned Tesla 33% of the fault, making the company liable for around $42.5 million in compensatory damages. It also imposed $200 million in punitive damages, bringing Tesla's total to roughly $242.5 million. Legal experts note that punitive damages assessed against only one party typically fall entirely on that party, as they did here.

The case stems from a crash in Key Largo, Florida, where George McGee, driving a Tesla Model S with Enhanced Autopilot activated, looked away from the road while reaching for a dropped phone. The car surged through an intersection at over 60 mph, striking a parked vehicle and hitting two people nearby. The impact killed 22-year-old Naibel Benavides and severely injured her boyfriend, Dillon Angulo, who suffered both physical and cognitive trauma.

The plaintiffs' attorneys argued that although Tesla marketed Autopilot as safer than human driving, the system did not prevent drivers from engaging it on roads it was not designed for. They claimed this gap contributed directly to the crash.

In response to the verdict, Tesla maintained that the driver's own actions, including speeding and overriding Autopilot, were the sole cause of the crash. The company criticized the decision as a setback for innovation and vowed to appeal, citing "major legal missteps" during the trial.

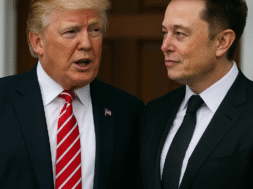

The verdict arrives at a sensitive moment for Tesla, whose CEO, Elon Musk, continues to push for broader public acceptance of self-driving technology and has promised a future of autonomous robotaxis. The ruling could also influence dozens of similar lawsuits pending across the U.S. that involve crashes with Tesla's Autopilot or Full Self-Driving (Supervised) systems.

Meanwhile, federal transportation regulators are investigating the effectiveness and safety of Tesla's driver-assist features. Earlier investigations led to software changes, but regulators are still evaluating whether those changes have actually reduced risk, particularly in scenarios involving stationary emergency vehicles.

Safety watchdogs have also warned that Tesla's branding and social media messaging may give drivers a false sense of security by implying more automation than the vehicles actually provide. To date, at least 58 deaths have been linked to crashes in which Autopilot was reportedly engaged just before impact.